How much do you know about clones (apart from the Star Wars kind)? Chances are, if you have ever developed software as part of a team, you have come across duplicate code, in most cases the result of Copy/Paste magic. The best way to find duplicates is still manual code review, a trusty and effective but time-consuming method. One frequently wishes for an automated tool for the job, and that’s where Clone Detective comes in. This very interesting free tool became available on CodePlex about a month ago, and it purports to help developers identify duplicate code in C# projects.

What is so exciting about this specific tool? Is it the first of its kind? Well, no: there are clone detection tools on the market; most are Java-oriented, but some work with more than one language. However, the tools I have tried were a) pretty clunky to operate, b) not integrated with the IDE and c) sold under weird licenses (priced by LOC processed, etc.). Clone Detective, on the other hand, is a) easy to run, b) reasonably well integrated into VS and c) free. Thus it is very tempting to try!

How does the tool work? Clone Detective is essentially a Visual Studio 2008 integration front-end (there is no VS2005 support) for the ConQAT engine: the Continuous Quality Assessment Toolkit, an academic project from the Technical University of Munich. When you run an analysis of your code, Clone Detective invokes the ConQAT engine on the files, then processes the results and presents them in a meaningful way.

Like many other academic projects, the ConQAT engine is written in Java, which means you must have a JRE installed (and the JRE version must be higher than 1.5). Make sure you install the JRE before Clone Detective; otherwise you will have to change the path to Java in the tool settings (through the “Tools->Options->Clone Detective” menu). Installing Java to analyze C# code is an interesting twist, but remembering that the tool is free helps overcome any doubts at that stage :)

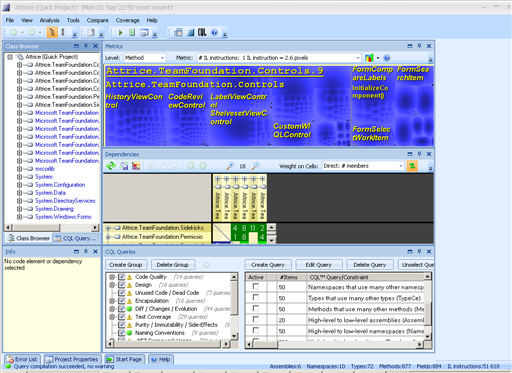

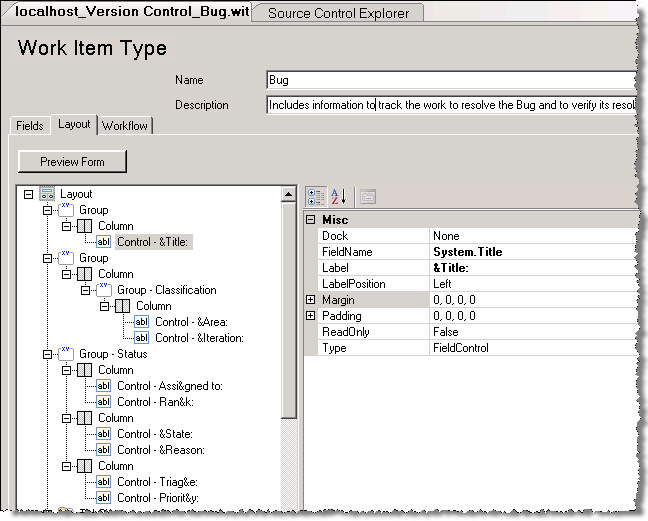

Once the Clone Detective package is installed, three additional tool windows become available through the “View->Other Windows” menu in your VS 2008 IDE: “Clone Explorer”, “Clone Intersections” and “Clone Results”.

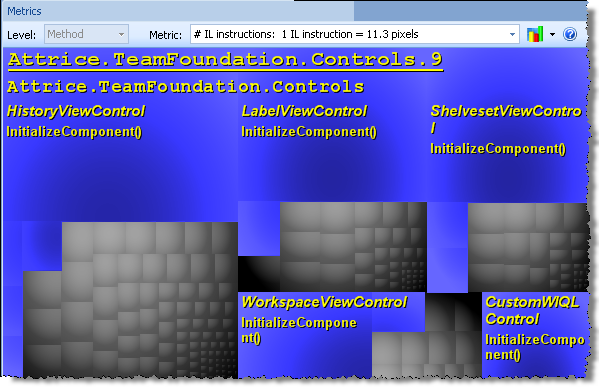

The “Clone Explorer” tool window is the main entry point: you use it to run the analysis and to review the high-level results. The results consist of a list of the files in the solution folder (grouped by physical folder), with an indication of the clones found in each file; the "Rollup" combo box lets you select different metrics, such as "Clone Percentage", "Number of Cloned Lines" etc.
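To make the two rollup metrics concrete, here is a minimal Python sketch of how such numbers could be derived from a list of clone ranges in a file. I do not know how Clone Detective actually computes them; the function names and the (start, end) range format are my own assumptions for illustration.

```python
def cloned_line_count(clone_ranges):
    """Count the distinct lines covered by inclusive (start, end) ranges.

    Overlapping ranges are counted once, so a line that belongs to
    several clones does not inflate the total.
    """
    covered = set()
    for start, end in clone_ranges:
        covered.update(range(start, end + 1))
    return len(covered)


def clone_percentage(clone_ranges, total_lines):
    """Share of the file's lines (0-100) that fall inside any clone."""
    if total_lines == 0:
        return 0.0
    return 100.0 * cloned_line_count(clone_ranges) / total_lines
```

For example, two overlapping clones on lines 1-5 and 4-8 of a 40-line file cover 8 distinct lines, which would give a clone percentage of 20.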

Double-clicking a file opens it in the IDE, with the detected clones highlighted in different colors on the left margin of the document window.

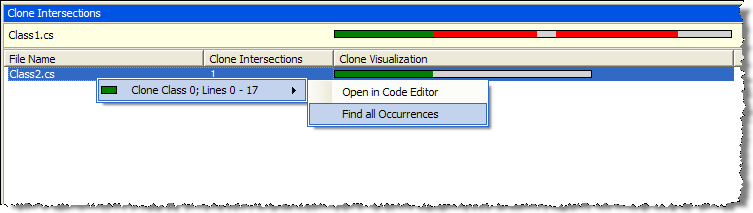

However, for better navigation you would use the “Clone Intersections” window, available by right-clicking on a specific file. This window displays a cumulative color map of the clones in the selected file at the top, followed by a list of the other files that contain clones from the selected file. Using the color legend, you can view the distribution of clones within the file and across different files.

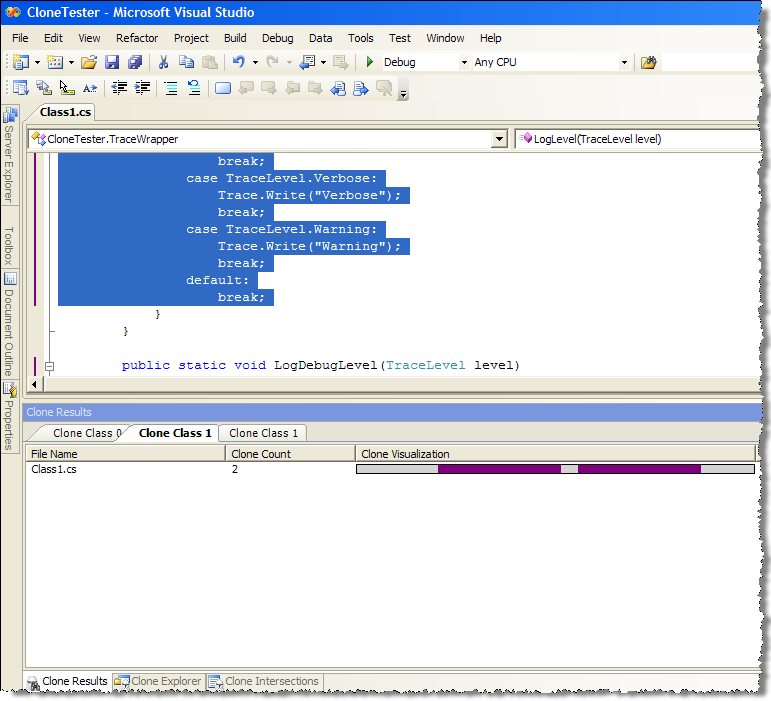

To drill down to a specific clone, right-click on a color bar (either the one at the top or one to the right of a file) and select "Find all occurrences". That brings up the “Clone Results” tool window, where you can review the specific clone: double-clicking on a file opens an IDE window with the focus on the clone, and color coding on the margin marks the range of duplicated code.

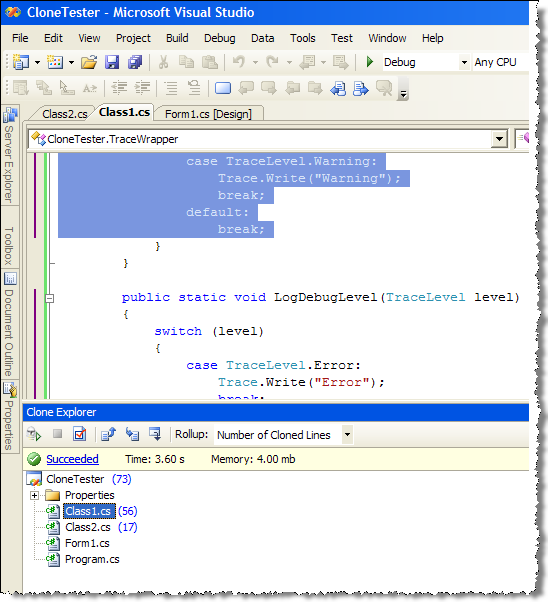

Here is duplicated code found within the same file:

And here is the code duplicated across two files:

In a nutshell, that's the feature set of the tool (I have not covered the configuration, accessible from the “Clone Explorer” window, which seems to hint at advanced fine-tuning options).

One additional important point to be aware of: the tool runs its analysis on all files in the solution folder and all its sub-folders, and it does not require the files to compile. Thus you may end up analyzing lots of files you did not intend to. But perhaps that can be mended through settings.
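Before running the analysis, it can help to see which files a recursive scan of the solution folder would pick up. The Python sketch below is my own illustration and not part of Clone Detective: the function name and the default exclusion list (`bin`, `obj`) are assumptions, and it only lists files on disk.

```python
import os


def collect_cs_files(root, excluded_dirs=("bin", "obj")):
    """List every .cs file under `root`, skipping the excluded folder names.

    `excluded_dirs` is a hypothetical filter; names are compared
    case-insensitively.
    """
    found = []
    for folder, subdirs, files in os.walk(root):
        # Prune excluded folders in place so os.walk does not descend into them.
        subdirs[:] = [d for d in subdirs if d.lower() not in excluded_dirs]
        for name in files:
            if name.lower().endswith(".cs"):
                found.append(os.path.join(folder, name))
    return sorted(found)
```

Calling `collect_cs_files(".")` from the solution folder gives a quick idea of how many source files, including generated or abandoned ones, are sitting in the tree.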

To get a feel for how well the tool works, I ran some ad-hoc tests with Clone Detective, and here are my findings:

- On a basic C# project that I wrote for testing, it did a very good job of identifying all the clones I had created on purpose, both within the same file and across two files

- On a real-life project (a single assembly of ~20K LOC) with obviously bad code and a high level of duplication (no OOD principles applied, extensive copy-paste), the tool identified the same problems that code review had found. Beyond that, it was very useful for establishing the scope of duplication across the solution, which makes it a viable alternative to tedious manual code review. I did not see any false positives.

- On another real-life solution containing five assemblies (~35K LOC) it did considerably worse: the run time was around 2 minutes, and it mistakenly reported 120K clones in one file of 2K LOC (the file contained some static array initialization code). Some of the other duplicates found were false positives (similar code, but not duplicated functionality).
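The array-initialization result is easier to understand with a toy detector. The Python sketch below is not ConQAT's algorithm (I have not looked at how the engine works internally); it is a deliberately naive approach, assumed here for illustration, that treats any two identical runs of `window` whitespace-normalized lines as a clone pair.

```python
from collections import defaultdict


def find_clone_pairs(lines, window=3):
    """Return (i, j) start-index pairs whose `window` lines are identical."""
    # Collapse whitespace so indentation differences do not hide a match.
    normalized = [" ".join(line.split()) for line in lines]
    starts_by_chunk = defaultdict(list)
    for i in range(len(normalized) - window + 1):
        starts_by_chunk[tuple(normalized[i:i + window])].append(i)
    pairs = []
    for starts in starts_by_chunk.values():
        # Every two occurrences of the same chunk form one clone pair.
        for a in range(len(starts)):
            for b in range(a + 1, len(starts)):
                pairs.append((starts[a], starts[b]))
    return pairs


# Hypothetical input: 50 identical rows of a static array initializer.
array_init = ["    0, 0, 0, 0,"] * 50
print(len(find_clone_pairs(array_init)))  # 1128
```

Fifty identical rows already yield 1128 pairs, because the count grows quadratically with the number of repeated chunks. Under this assumption, a 2K LOC file full of repetitive initializers could plausibly produce clone counts far larger than its line count.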

So in terms of success rate I am still undecided, and I need to use it more to establish some benchmarks. But for a free tool, it was easy to use and delivered on its declared functionality (with some caveats).

On the problem side, running the tool on a large number of files may produce a huge amount of data to analyze, and the sheer number of reported clones may make reviewing the results less productive. Integration into the VS project build, so that it can be used throughout development (à la code analysis), and into a continuous build flow may therefore be features to consider for the future.

Overall, Clone Detective is well worth a test drive; I believe it may be useful as part of code review and of the overall development process.