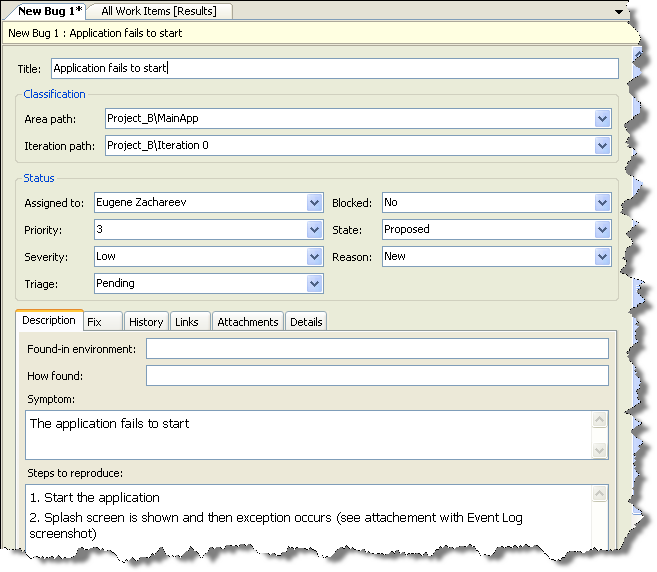

Today I would like to talk about system fields that must be present in every work item.

All of those fields have refname System.XXX and are available for any Microsoft Work Item template (both in CMMI and MSF templates). They represent minimal recommended subset of fields that any custom work item template should contain. Having such common subset allows reusing basic WIQL queries or reports from pre-defined templates with your custom templates.

You will notice that for most of those fields the ability to customize their behavior is extremely limited (except for UI-related properties). This is an important limitation one should be aware of when designing custom templates; usually you will just duplicate logic and definitions available in out-of-the-box WI templates for those fields (and that would be a recommended approach since there are almost no supported behaviors to apply anyway).

Let’s have a look at system fields by the category:

Core fields

Those are the fields that you will never update through UI, and in most cases will use only for WIQL queries or reports

- ID - unique (across TFS Server) ID for the work item, that gets assigned when you create work item

- Team Project - Team Project that work item belongs to

- Work Item Type - Work Item Template name that was used to create a work item

- Rev - work item historical revision (for newly created WI it is 1, and gets incremented with every change)

- Linked artifacts count fields provide number of artifacts linked to the WI. The following fields are available: Attached File Count, External Link Count, Hyper Link Count, Related Link Count

You do not need to define any of those fields in your WI template (with the exception of ID field); they will be available for queries on any WI template you create.

As a footnote, it is worth mentioning that attachments and links do not have corresponding fields; you just place an appropriate control in UI and magically attachments/links become supported. The only fields related to this functionality are custom Count fields mentioned above (that means no linking queries are supported in current version; this is killer feature coming up in the next version of TFS).

Special behavior fields

Those fields have specific behavior associated with them, with this behavior being part of the WI template definition syntax and either having dedicated customization mechanism (e.g. State field) or no customization available (e.g. for History field only UI properties can be changed).

- State – the state field values and behavior are defined in WORKFLOW section; the only general behavior that can be applied to state field is READONLY (i.e. state may be set read-only depending on certain condition, and thus WI status cannot be changed)

- Reason – the reason field values and behavior are also defined in dedicated WORKFLOW section; likewise the only generic field behavior supported is READONLY

- History - the field contains the history of WI (each entry contains all fields changed, timestamp and optional comment). The only usage is to display it in WorkItemLog control; no rules can be defined on this field and basically it is display only

- Area Path - the field represents assigned area. Area path can be displayed only in WorkItemClassification control, and supports only limited subset of generic field behaviors

- Iteration Path - the field represents assigned iteration. Iteration is also may be displayed only in WorkItemClassification control, , and supports only limited subset of generic field rules

Audit fields

The auditing fields are usually updated automatically and are read-only in UI (though this behavior can be customized, generally it is not a good idea):

- Created By/Created Date - the user that created WI and creation date

- Changed By/Changed Date - the last user that changed WI and change date

- History field can be also considered an audit field, but its behavior cannot be modified (history is always updated automatically).

Data fields

The data fields are the fields that are usually present in any work item template, independent of work item purpose. Indeed, any work item will have short/long text description and person responsible for that unit of work:

- Title – work item title

- Description – work item description

- Assigned To – currently assigned user

As a conclusion, it is important to note that those common fields already defined in data warehouse so in addition to WIQL you can perform reports on them without any additional setup.

Related posts:

- Work Item Customization: user interface (part 4)

- Work Item Customization: state transitions (part 3)

- Work Item Customization: conditional field behavior (part 2)

- Work Item Customization: fields definition (part 1)

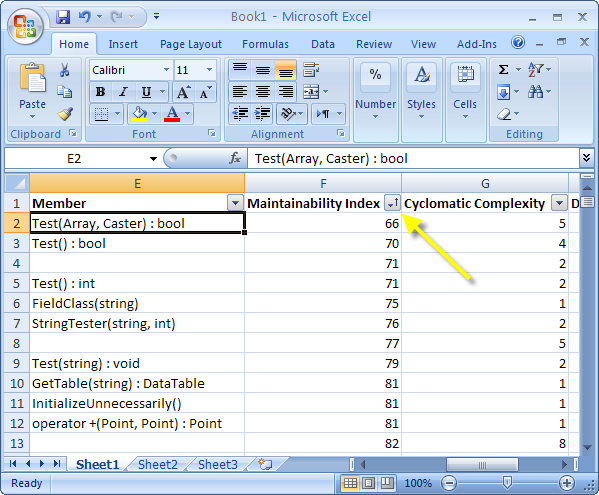

on the Code Metrics window toolbar, and voila! Your results are available in Excel spreadsheet, with advanced sorting and filtering functionality, and graphs, and statistics …

on the Code Metrics window toolbar, and voila! Your results are available in Excel spreadsheet, with advanced sorting and filtering functionality, and graphs, and statistics …